How to Use the Opportunity Score to Prioritize Your JTBD Product Roadmap

...Or Maybe Not: A Critical Look at a Popular Prioritization Tool

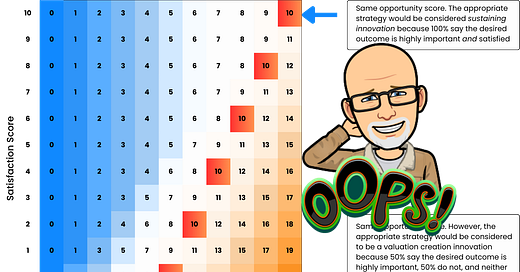

You've probably heard of the Opportunity Score. It's a popular metric for identifying and prioritizing customer needs, usually displayed on a scatter plot, and it promises to guide your product roadmap with data-driven precision.

The premise is enticing: uncover unmet needs, quantify their importance, and focus your efforts where they'll have the biggest impact.

But does the Opportunity Score live up to the hype?

This article walks through the mechanics of the Opportunity Score, shows how it's calculated, and then reveals some critical flaws that could be leading you astray. We'll also explore more reliable ways to prioritize your strategic product roadmap around real customer needs: a modified Rank-Sum approach and a method I call Percentages and Ranks. Both are adapted to use a 1-100 prioritization scale derived from traditional 1-5 importance and satisfaction ratings (although I prefer effort over satisfaction).
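For orientation, here is a minimal sketch of the calculation as it is commonly published: importance plus the gap between importance and satisfaction, with the gap floored at zero. It assumes each 1-5 rating set is first converted to a 0-10 score using the share of respondents answering 4 or 5. The function names and the sample ratings are hypothetical, and this is the widely cited formulation, not necessarily the exact variant used later in this article.

```python
def top_two_box_score(ratings, scale_max=10.0):
    """Share of 1-5 ratings that are 4 or 5, rescaled to 0..scale_max.

    Assumption: a "top-two-box" conversion, as commonly described for
    this metric. Other conversions (e.g. plain means) are also used.
    """
    if not ratings:
        raise ValueError("ratings must not be empty")
    top = sum(1 for r in ratings if r >= 4)
    return scale_max * top / len(ratings)


def opportunity_score(importance, satisfaction):
    """Importance plus the unmet gap; the gap never goes below zero."""
    return importance + max(importance - satisfaction, 0.0)


# Hypothetical survey responses for a single customer need (1-5 scale).
importance_ratings = [5, 4, 4, 5, 3, 4, 5, 2, 4, 5]
satisfaction_ratings = [2, 3, 1, 4, 2, 3, 5, 2, 1, 3]

imp = top_two_box_score(importance_ratings)
sat = top_two_box_score(satisfaction_ratings)
score = opportunity_score(imp, sat)
print(f"importance={imp}, satisfaction={sat}, opportunity={score}")
```

Because the gap is floored at zero, a need that is already over-served scores exactly its importance and is never penalized for excess satisfaction.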

Step 1: Make Your Data Accessible

Before we dive into prioritiz…
